Can anybody explain to me why Apple would fund/sponsor this?

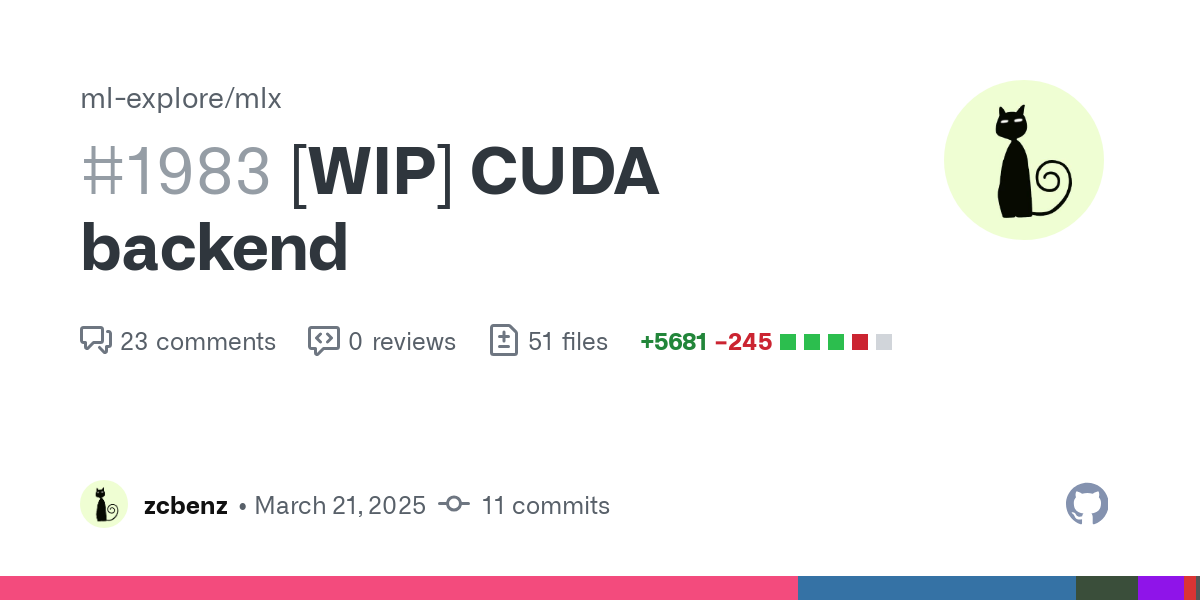

This enables MLX models to run on hardware other than Apple silicon. Otherwise the format is of no use to anyone not using Apple hardware, which covers a large portion of the LLM community and of computer users in general.

A few guesses:

- It's still much faster to train on a high-end Nvidia GPU than on M-series processors. CUDA for training, M- or A-series chips for inference using Apple's Core ML framework.

- Ability to run MLX models (like Apple's foundation models) on CUDA devices for inference. A bit like when they released iTunes for Windows. This way, MLX has a chance at becoming a universal format.

- Let Apple use Nvidia clusters in its cloud for Private Cloud Compute.