So was I. “Yes, and”, as we say in improv. (I’ve never done improv.)

TheTechnician27

“Falsehood flies, and truth comes limping after it, so that when men come to be undeceived, it is too late; the jest is over, and the tale hath had its effect: […] like a physician, who hath found out an infallible medicine, after the patient is dead.” —Jonathan Swift

- 32 Posts

- 765 Comments

Yeah, hence “a translator”. You didn’t think I meant from Renaissance-era Italian to modern Japanese, did you? No one person could probably do that. I meant a translator from this plane to the next.

22·12 hours ago

Okay, fuck, he got me, that’s really funny. Good job. Now please submit to The Hague, confess everything, and spend the rest of your life in prison.

Fun fact: woodlice are terrestrial isopods, meaning they share a class (Malacostraca, the second-largest crustacean class after Insecta) with the decapods like crabs, shrimp, etc. Orders Isopoda and Decapoda are far apart within the class, but they’re still in there!

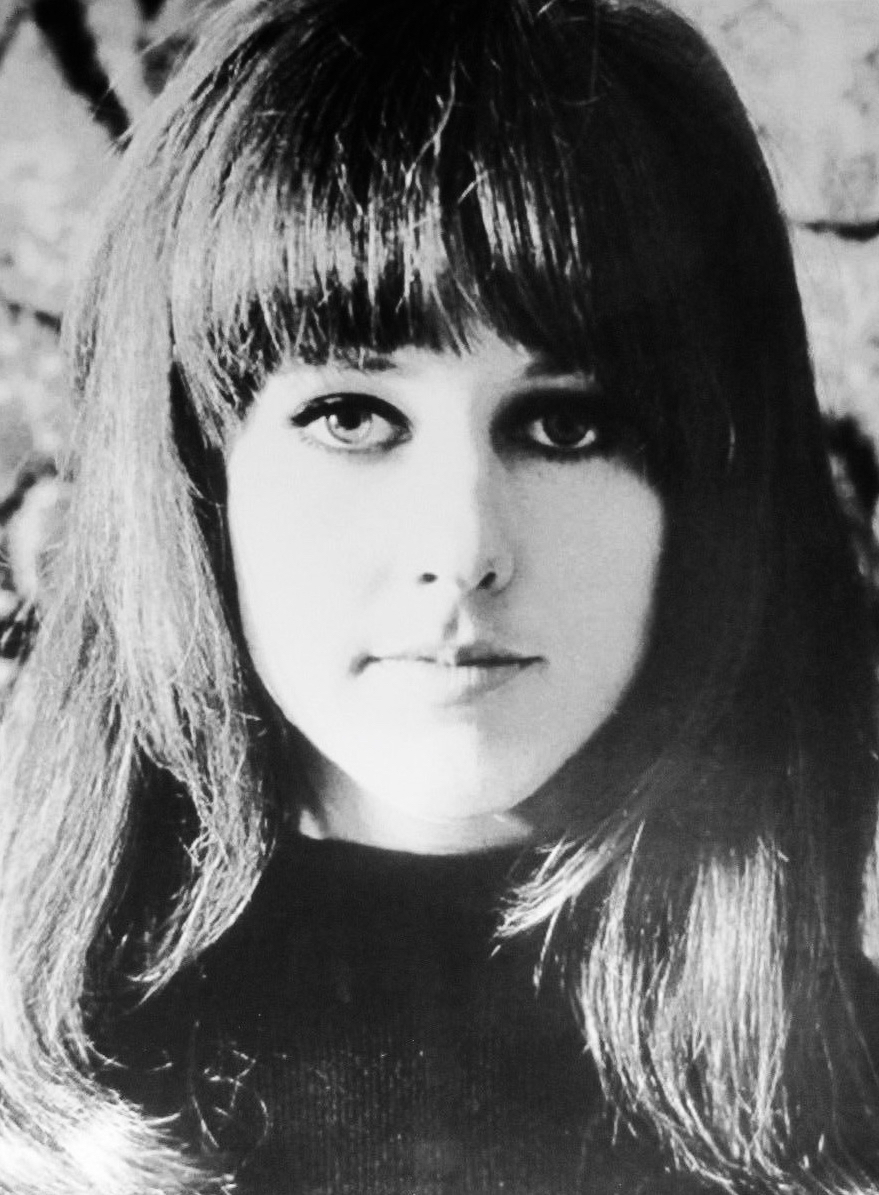

Edit: Wait, fuck, is that one girls?

Given a translator, can you even imagine Leonardo da Vinci and Hideo Kojima in a room together?

93·1 day ago

“What makes people who’ve spent years studying and practicing law so qualified for and interested in writing and executing federal law? Golly, it’s just such a mystery why one might value a law degree for the person tasked with directing the principal law enforcement agency of the United States.”

The fact that @Lodespawn@aussie.zone had to defend a law degree as a qualification to lead the FBI (not sufficient unto itself, but a qualification nonetheless) shows how insular places like Lemmy are. Just staggeringly asinine. You would be laughed out of the room if you asked this anywhere else.

56·2 days ago

Nope. It was posted to Flickr around nine years before being uploaded to Commons. The Commons description “My cat loves styrofoam peanuts” is likely lifted verbatim from the Flickr post, as we tend to do with other such descriptions.

Cat’s name was Cooper, by the way.

Sextina Aquafina’s distant ancestor.

6·3 days ago

Is the issue that there are people paying for YouTube Premium?

Okay, so draw on your expertise™ to explain for the class how:

- Fandom wikis are “non-genuine” despite meeting every criterion for one, and despite by far the most prolific wiki in history detailing forward and backward how they are wikis.

- “Powered by MediaWiki” is representative of indie wikis and not of Fandom wikis to anyone who knows anything about either.

You can’t, because both are bullshit, but if you want to act like a clown, here are your unicycle and juggling pins.

19·4 days ago

Buddy, I don’t like the culture of ragebait that the Internet has fomented either, but this isn’t that. This is an LGBTQ magazine reporting on an influential figure’s intentions to disenfranchise every trans person in the US.

The news in its ideal form exists to tell you about important things that are happening, not to balance itself to make you feel better about the world (and things generally are pretty terrible right now).

The examples of “Young Doctor Wants to Improve Healthcare” or “Environmentalist Wants to Protect Our Forests” are totally nebulous in what they want to achieve and ambiguous in how they plan to achieve it, compared to an org that’s been systematically dismantling the US from the inside for over a year. They’re also a type of story that gets reported on all the time when it’s actually consequential.

> There is no gatekeeping

Definitionally there is, because you called Fandom wikis “non-genuine wikis”: “genuine wikis provide a much nicer user experience than Fandom wikis”. That’s quintessential gatekeeping, and incorrect gatekeeping at that, because Fandom wikis provably are genuine wikis.

> just a statement of a preference, as a user.

We both agree here. You are correct to want to use platforms other than Fandom. Fandom sucks for editors, sucks for readers, and sucks for fan communities; I could write an essay explaining why. I understand the sentiment behind the meme completely; it’s the way it arrives at the sentiment that’s totally nonsensical. You’ve made no coherent point and spread misinformation. You don’t have to make a point to express your opinion, but it’s clear you tried to.

Your comment is like if someone said “You should use a Linux phone to get away from Google and Apple” and you responded with “Android uses Linux”.

I promise, as someone extensively familiar with MediaWiki who even administers an indie wiki, that this comparison makes absolutely no sense, and I think you’re reaching to make the OP’s meme make any actual sense when it clearly doesn’t. If we’re reaching into Linux, this is more (still somewhat tenuously) akin to someone telling Ubuntu users that they’re not using “real Linux”. They lack the language to express Ubuntu’s actual problems, which really exist, and so resort to vacuous gatekeeping instead.

Edit: Actually, the analogy doesn’t even get basic enough to describe that. It’s like a meme where Geordi rejects the Ubuntu logo and accepts the Tux logo, and then under that is the OP trying to argue that Ubuntu isn’t a “genuine” operating system.

> Buddy, this is a joke.
>
> That, however, does not weaken my point at all.

It invalidates your point. You’re trying to gatekeep what does and does not constitute a “wiki” while clearly having no idea what that word means. And if you knew Fandom uses MediaWiki, it clearly doesn’t show in your meme, because a) Fandom is powered by MediaWiki so it’s not mutually exclusive like Geordi implies, and b) like Fandom, most indie wikis in my experience don’t use the “Powered by MediaWiki” icon either (just in case you’d go with that bizarre, last-ditch non sequitur of an argument). Your meme is nonsense if Fandom uses MediaWiki, which it does.

As for “genuine wiki”, Fandom clearly meets all the criteria for being one, not to mention that it literally uses the de facto wiki software. I’ll let Wikipedia speak for this as an expert witness:

> A wiki is a form of hypertext publication ✅ on the internet ✅ which is collaboratively edited ✅ and managed by its audience ✅ directly through a web browser. ✅ A typical wiki contains multiple pages ✅ that can either be edited by the public ✅ or limited to use within an organization for maintaining its internal knowledge base. Wikis are powered by wiki software, ✅ also known as wiki engines. Being a form of content management system, these differ from other web-based systems such as blog software or static site generators in that the content is created without any defined owner or leader. ✅ Wikis have little inherent structure, ✅ allowing one to emerge according to the needs of the users. ✅ Wiki engines usually allow content to be written using a lightweight markup language ✅ and sometimes edited with the help of a rich-text editor. ✅

It doesn’t seem like you’re “well aware” of anything you’re talking about except the general (correct) sentiment that Fandom sucks. Fandom sucks for so many reasons that it’s legitimately impressive you managed to miss all of them while making this meme. I was being earnestly polite when you told me to touch grass, so since even that is apparently escalatory, I’ll just… not.

Uhhh… OP, Fandom uses MediaWiki. Fandom is terrible for other reasons, and not using MediaWiki anyway wouldn’t make you a “non-genuine wiki”.

There’s a good browser extension called Indie Wiki Buddy that helps redirect you from Fandom to independent fan wikis, which in my experience are almost always better.

It’s a reference to people who like to ignore discussion of contentious issues by saying they’re not really into politics while failing to realize or care that literally every single aspect of their modern life is directly intertwined with politics.

67·4 days ago

Every article I read about Intel makes me more thankful that I got a Ryzen 1700 in 2017 and never looked back.

I mean, I’ve read that the giant marine isopod *Bathynomus giganteus* is popular in Vietnam, so probably, although there’s likely a good reason beyond scarcity that it’s not a widely popular delicacy. I might be concerned about bioaccumulated heavy metals in terrestrial ones, they’d be highly inefficient to prepare, and I’ve never heard of any culture that eats them. But I’m sure it’d be doable. Just to what end, you know?